ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are two fundamental approaches to preparing data for analytics and business intelligence. They define the order in which raw data is moved, shaped, and stored from source systems into centralized repositories like data warehouses or data lakes.

Understanding the difference between ETL and ELT is key to building scalable, efficient data pipelines, especially in cloud-based environments where performance and flexibility matter.

What Is ETL?

ETL is a traditional data integration process where:

- Extract: Data is collected from multiple source systems

- Transform: Data is cleaned, standardized, enriched, and formatted

- Load: The final transformed data is loaded into a data warehouse or database

ETL is commonly used when transformation logic is complex or when systems require high levels of data cleansing before storage.
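A minimal sketch of the three ETL steps in Python. It assumes a small CSV export as the source and uses an in-memory SQLite database (`sqlite3` from the standard library) as a stand-in for the data warehouse. The function names and sample rows are hypothetical. The point is that cleaning happens in the pipeline, so only finished rows reach the destination.

```python
import csv
import io
import sqlite3

# Hypothetical source export; a real pipeline would read from an API, file, or database.
RAW_CSV = """order_id,customer,amount,country
1, Alice ,19.99,us
2,Bob,5.00,US
3,,12.50,fr
"""

def extract(text: str) -> list[dict]:
    """Extract: read rows from the source as-is."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: clean and standardize in the pipeline, before anything is stored."""
    cleaned = []
    for row in rows:
        customer = row["customer"].strip()
        if not customer:  # drop rows that fail a basic quality rule
            continue
        cleaned.append((
            int(row["order_id"]),
            customer,
            round(float(row["amount"]), 2),
            row["country"].strip().upper(),  # standardize country codes
        ))
    return cleaned

def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: write only the finished rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Row 3 never reaches the warehouse. The rule that rejects it runs before loading, so the original value is unavailable downstream unless the source is re-extracted.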

What Is ELT?

ELT reverses the last two steps of ETL. In this approach:

- Extract: Data is pulled from source systems

- Load: Raw data is quickly loaded into a cloud-based data warehouse or data lake

- Transform: Data is transformed inside the destination system using its compute power (e.g., SQL engines)

ELT is the more modern, cloud-oriented approach. It works best on platforms like Snowflake, BigQuery, or Databricks, which can run transformations at scale.
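For contrast, here is the same data handled ELT-style. SQLite again stands in for a cloud warehouse such as Snowflake or BigQuery; this is an assumption for illustration, and their SQL dialects differ in details. The raw rows land untouched in a `raw_orders` table, and the cleaning is expressed as SQL that runs inside the destination. Table names are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source export (same sample as a plain CSV string).
RAW_CSV = """order_id,customer,amount,country
1, Alice ,19.99,us
2,Bob,5.00,US
3,,12.50,fr
"""

conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse

# Extract + Load: land every row exactly as received, all columns as text.
rows = list(csv.DictReader(io.StringIO(RAW_CSV)))
conn.execute(
    "CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount, :country)", rows)

# Transform: build a clean model from the raw table using the destination's SQL engine.
conn.execute("""
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           TRIM(customer)            AS customer,
           CAST(amount AS REAL)      AS amount,
           UPPER(TRIM(country))      AS country
    FROM raw_orders
    WHERE TRIM(customer) <> ''
""")
conn.commit()

print(conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone())  # raw rows are kept
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Because `raw_orders` is kept, the `orders` model can be rebuilt later with different rules without going back to the source system.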

ETL vs. ELT: Key Differences

| Feature | ETL | ELT |
|---|---|---|
| Data Flow | Extract → Transform → Load | Extract → Load → Transform |
| When to Transform | Before loading | After loading |
| Storage Target | Data warehouses | Cloud data warehouses or lakes |
| Load Speed | Slower (transformation must finish before data lands) | Faster (raw data is loaded immediately) |
| Complexity | More upfront data modeling | More flexible, iterative transformations |
| Tool Examples | Talend, Informatica, SSIS | Fivetran, dbt, Matillion, Airbyte |

When to Use ETL

- You have limited storage and only need clean data in the destination

- Your business logic must be applied before data is analyzed

- You work with on-premises systems or traditional BI stacks

When to Use ELT

- You’re using cloud data warehouses with scalable compute

- You want to store raw data for reuse or reprocessing

- You prefer modular, SQL-based transformation (e.g., dbt)

ETL/ELT in a Modern Data Stack

In today’s cloud-first environments, many teams blend both approaches. For example, they may use ELT for most data ingestion but add pre-load transformations for sensitive or high-risk data.
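One way to sketch this hybrid pattern assumes the sensitive field is an email address. The email is replaced with a keyed hash (HMAC-SHA-256 from Python's standard library) before loading, while every other column lands raw for later SQL modeling. This is pseudonymization rather than full anonymization, since anyone holding the key can re-derive the hashes. The key, table, and column names are hypothetical.

```python
import hashlib
import hmac
import sqlite3

# Hypothetical key; in practice load it from a secrets manager, never from source code.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed SHA-256 hash (normalized first)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(PSEUDONYM_KEY, normalized, hashlib.sha256).hexdigest()

source_rows = [
    {"email": "alice@example.com", "plan": "pro", "signup_date": "2024-01-05"},
    {"email": "Bob@Example.com", "plan": "free", "signup_date": "2024-02-11"},
]

conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
conn.execute("CREATE TABLE raw_signups (email_hash TEXT, plan TEXT, signup_date TEXT)")

# The pre-load transformation touches only the sensitive column (the ETL part);
# plan and signup_date land raw and are modeled later in SQL (the ELT part).
conn.executemany(
    "INSERT INTO raw_signups VALUES (?, ?, ?)",
    [(pseudonymize(r["email"]), r["plan"], r["signup_date"]) for r in source_rows],
)
conn.commit()
print(conn.execute("SELECT * FROM raw_signups").fetchall())
```

Normalizing before hashing means the same address maps to the same token across loads, so downstream joins on `email_hash` still work.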

Modern tools like Airbyte, Fivetran, Stitch, and dbt make it easier to automate and maintain both ETL and ELT pipelines with minimal code and robust monitoring.

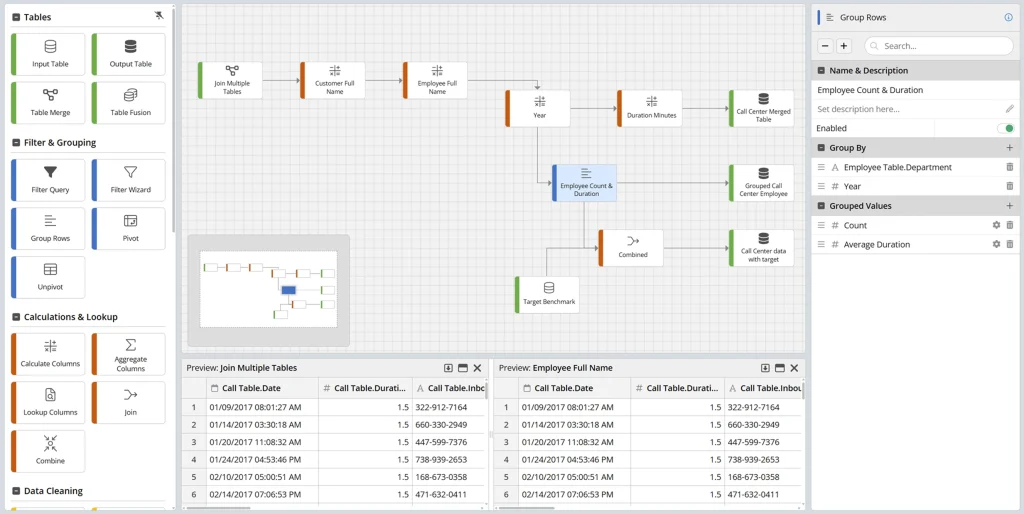

How ClicData Supports ETL and ELT

ClicData provides built-in ETL and ELT capabilities so you can prepare data exactly how you need it, whether you’re transforming before loading or working directly from raw sources.

With ClicData, you can:

- Connect to multiple data sources (cloud apps, files, databases)

- Transform data using formulas, joins, filters, and aggregations

- Load data into your ClicData workspace or connect to external warehouses

- Schedule and automate workflows to refresh dashboards in real time

Whether you need full ETL pipelines or lightweight ELT workflows, ClicData makes it easy to design, run, and monitor data processes, all in a visual, no-code environment.

FAQ: ETL and ELT

How do you choose between ETL and ELT for a new data project?

The decision depends on your infrastructure, data volume, and transformation needs. ETL is often better for complex cleaning before storage, while ELT is ideal if you have a scalable cloud warehouse and want to keep raw data for flexibility. Many modern teams use a hybrid approach.

What are common mistakes when implementing ETL pipelines?

A frequent error is hardcoding transformation logic, which makes pipelines difficult to update. Other issues include not monitoring pipeline health, skipping data validation, and cramming every transformation into a single step instead of breaking the work into manageable stages.
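A minimal sketch of the alternatives to those mistakes, assuming dictionary-shaped rows. The column mapping lives in a data structure rather than in the logic, validation is its own stage, and each stage returns its errors so they can be monitored instead of silently dropped. All names (`COLUMN_MAP`, `rename`, `validate`, `run`) are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical mapping kept as data (it could be loaded from a config file),
# so renaming a source column does not require a code change.
COLUMN_MAP = {"cust_nm": "customer", "amt": "amount"}
REQUIRED = ("customer", "amount")

@dataclass
class StageResult:
    rows: list
    errors: list = field(default_factory=list)

def rename(rows: list[dict]) -> StageResult:
    """Stage 1: apply the configurable column mapping."""
    return StageResult([{COLUMN_MAP.get(k, k): v for k, v in r.items()} for r in rows])

def validate(rows: list[dict]) -> StageResult:
    """Stage 2: reject rows with missing required fields or non-numeric amounts."""
    good, errors = [], []
    for i, r in enumerate(rows):
        missing = [c for c in REQUIRED if not r.get(c)]
        if missing:
            errors.append(f"row {i}: missing {missing}")
            continue
        try:
            r = {**r, "amount": float(r["amount"])}
        except ValueError:
            errors.append(f"row {i}: bad amount {r['amount']!r}")
            continue
        good.append(r)
    return StageResult(good, errors)

def run(rows: list[dict]) -> StageResult:
    """Chain small stages and collect every stage's errors for monitoring."""
    renamed = rename(rows)
    checked = validate(renamed.rows)
    return StageResult(checked.rows, renamed.errors + checked.errors)

result = run([
    {"cust_nm": "Alice", "amt": "19.99"},
    {"cust_nm": "", "amt": "5"},
    {"cust_nm": "Bob", "amt": "n/a"},
])
print(len(result.rows), result.errors)
```

Because each stage is a separate function, a new rule can be added or tested in isolation, and the error list can feed whatever alerting the team already uses.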

Can ELT handle sensitive or regulated data securely?

Yes, but security must be built into the process. This includes encrypting data in transit and at rest, applying row-level permissions, and masking sensitive fields before analysts can access them. In some cases, partial ETL is still used to anonymize data before loading it.
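As a sketch of warehouse-side masking, the example below exposes a view that hides the local part of each email address. It uses SQLite for portability. SQLite has no user accounts or GRANT statements, so the assumption is that a real warehouse would give analysts access to `customers_masked` only, and not to the underlying `customers` table. Table and view names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT, country TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", [
    (1, "alice@example.com", "US"),
    (2, "bob@example.org", "FR"),
])

# Analysts query this view; the raw table would be restricted by warehouse
# permissions (not modeled here, since SQLite has no GRANT statement).
conn.execute("""
    CREATE VIEW customers_masked AS
    SELECT id,
           CASE WHEN INSTR(email, '@') > 0
                THEN '***' || SUBSTR(email, INSTR(email, '@'))  -- keep only the domain
                ELSE '***'
           END AS email,
           country
    FROM customers
""")
print(conn.execute("SELECT * FROM customers_masked ORDER BY id").fetchall())
```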

How do ETL and ELT impact data freshness in dashboards?

ETL processes may introduce delays because transformations happen before loading, while ELT can update dashboards faster by loading raw data immediately. However, ELT requires transformation scheduling inside the warehouse to keep metrics current. The best choice balances speed and data quality.
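A common way to keep ELT metrics current without rebuilding everything is an incremental transformation driven by a high-water mark. The sketch below again uses SQLite as a stand-in warehouse. It re-aggregates only the days touched by rows loaded since the last run, so late-arriving events still correct earlier totals. The table names and the `refresh_daily_revenue` function are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse
conn.executescript("""
    CREATE TABLE raw_events (event_id INTEGER PRIMARY KEY, event_date TEXT,
                             amount REAL, loaded_at TEXT);
    CREATE TABLE daily_revenue (day TEXT PRIMARY KEY, revenue REAL);
    CREATE TABLE watermark (name TEXT PRIMARY KEY, value TEXT);
    INSERT INTO watermark VALUES ('daily_revenue', '');
""")

def refresh_daily_revenue(conn: sqlite3.Connection) -> None:
    """Recompute revenue only for days that received rows since the last run."""
    (last,) = conn.execute(
        "SELECT value FROM watermark WHERE name = 'daily_revenue'").fetchone()
    # Re-aggregate each affected day in full, so late-arriving rows fix old totals.
    conn.execute("""
        INSERT OR REPLACE INTO daily_revenue (day, revenue)
        SELECT event_date, SUM(amount) FROM raw_events
        WHERE event_date IN (SELECT event_date FROM raw_events WHERE loaded_at > ?)
        GROUP BY event_date
    """, (last,))
    # Advance the watermark to the newest load time seen so far.
    conn.execute("""
        UPDATE watermark
        SET value = (SELECT COALESCE(MAX(loaded_at), ?) FROM raw_events)
        WHERE name = 'daily_revenue'
    """, (last,))
    conn.commit()

# First load, then a second load containing a late event for 2024-03-01.
conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?, ?)", [
    (1, "2024-03-01", 10.0, "2024-03-01T08:00:00"),
    (2, "2024-03-01", 5.0, "2024-03-01T09:00:00"),
])
refresh_daily_revenue(conn)
conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?, ?)", [
    (3, "2024-03-01", 2.5, "2024-03-02T07:00:00"),
    (4, "2024-03-02", 7.0, "2024-03-02T07:00:00"),
])
refresh_daily_revenue(conn)
print(conn.execute("SELECT * FROM daily_revenue ORDER BY day").fetchall())
```

The filter uses a strict `>` on `loaded_at`, so this sketch assumes each new load carries timestamps later than the previous watermark. Production pipelines often re-read a small overlap window to be safe.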

How can automation and orchestration tools improve ETL/ELT workflows?

Tools like Airflow, Dagster, or Prefect can schedule, monitor, and recover ETL/ELT jobs automatically. They handle dependencies between tasks, alert teams to failures, and optimize resource usage. For example, you can trigger a transformation only after a new dataset is loaded, reducing costs and ensuring consistency.
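These orchestrators share a core idea: run tasks in dependency order and retry failures. That idea can be sketched with Python's standard-library `graphlib.TopologicalSorter`. This is not the API of Airflow, Dagster, or Prefect. `run_pipeline` and the task names are hypothetical, and a real orchestrator adds scheduling, persistence, and alerting on top.

```python
import time
from graphlib import TopologicalSorter

def run_pipeline(tasks, deps, retries=2, delay_s=0.0):
    """Run tasks in dependency order, retrying each failing task up to `retries` times.

    `deps` maps a task name to the set of tasks that must finish first, so a
    transform only starts once the load it depends on has succeeded.
    """
    log = []
    for name in TopologicalSorter(deps).static_order():
        for attempt in range(1, retries + 2):  # first try plus `retries` retries
            try:
                tasks[name]()
                log.append((name, "ok", attempt))
                break
            except Exception as exc:
                log.append((name, f"failed: {exc}", attempt))
                if attempt > retries:
                    raise RuntimeError(f"{name} failed after {attempt} attempts") from exc
                time.sleep(delay_s)  # back off before retrying
    return log

calls = {"extract": 0}

def flaky_extract():
    """Hypothetical extract step that times out on its first call."""
    calls["extract"] += 1
    if calls["extract"] == 1:
        raise ConnectionError("source timeout")

tasks = {
    "extract_orders": flaky_extract,
    "load_orders": lambda: None,       # placeholder load step
    "transform_orders": lambda: None,  # placeholder SQL transformation
}
deps = {"load_orders": {"extract_orders"}, "transform_orders": {"load_orders"}}
log = run_pipeline(tasks, deps)
for entry in log:
    print(entry)
```

The transient extract failure is retried, and `transform_orders` runs only after `load_orders` succeeds. If a task exhausts its retries, the raised error stops every downstream task from running.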