Data observability refers to the ability to monitor, understand, and diagnose the health of data across pipelines, from ingestion to consumption.

It helps you answer questions such as:

- Is the data fresh?

- Is anything missing or delayed?

- Did values suddenly change?

Unlike data discoverability, observability is about system behavior rather than human usability. It helps data teams detect and resolve data issues before they affect business decisions.

Key Components of Data Observability

- Freshness monitoring: gives you assurance that data arrives when expected. Late or stale data is one of the most common causes of broken dashboards and incorrect insights, especially in operational reporting.

- Volume and completeness checks: these checks monitor whether data volumes suddenly spike or drop compared to what is expected. For example, a sudden drop in events may indicate a broken integration, while an unexpected spike may indicate data duplication.

- Schema and structure monitoring: data observability tools track changes in column names, data types and table structure. This is critical for preventing silent failures in downstream data transformations and reports.

- Distribution and anomaly detection: data observability goes beyond basic checks and looks for unusual patterns in the data itself, such as sudden changes in averages and ratios or unexpected value distributions. This lets you rapidly catch upstream logic changes or corrupted data.

- Alerting and incident context: observability only works if issues reach the right people with enough context to act. Your stakeholders need to know immediately the severity level of the incident, which objects are impacted, and the possible root causes. Be aware, though, that too many alerts quickly lead to fatigue and slower reactions from stakeholders.
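The first four checks in this list can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not how any particular tool implements them: the function names, thresholds, and sample values are all hypothetical, and in practice the inputs would come from your warehouse or pipeline metadata.

```python
from datetime import datetime, timedelta
from statistics import mean, stdev


def is_fresh(last_loaded_at, now, max_delay):
    """Freshness: the latest load must be no older than max_delay."""
    return now - last_loaded_at <= max_delay


def volume_deviation(row_count, recent_counts):
    """Volume: relative difference between a row count and the recent average."""
    baseline = mean(recent_counts)
    return (row_count - baseline) / baseline


def schema_drift(expected, actual):
    """Schema: report columns that were removed, added, or changed type."""
    return {
        "removed": sorted(expected.keys() - actual.keys()),
        "added": sorted(actual.keys() - expected.keys()),
        "retyped": sorted(
            col for col in expected.keys() & actual.keys()
            if expected[col] != actual[col]
        ),
    }


def z_score(value, history):
    """Distribution: how many standard deviations a value sits from its history."""
    return (value - mean(history)) / stdev(history)


# Hypothetical sample values, for illustration only.
now = datetime(2024, 1, 2, 9, 0)
fresh = is_fresh(datetime(2024, 1, 2, 6, 30), now, timedelta(hours=3))
deviation = volume_deviation(400, [1000, 980, 1020, 1000])
drift = schema_drift(
    {"order_id": "int", "amount": "float", "country": "str"},
    {"order_id": "int", "amount": "str", "region": "str"},
)
score = z_score(58.0, [20.0, 22.0, 21.0, 19.0, 23.0])
```

With these sample values, the load is still within its three-hour window, the row count is 60% below the recent average, the schema comparison reports one removed, one added, and one retyped column, and the z-score is far above a typical alerting threshold of 3. Which thresholds actually deserve an alert is a judgment call that depends on your data.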

Benefits of Data Observability

When data observability is done well, the impact goes far beyond convenience.

Faster Decision Making

Your data team spends less time searching for data and validating numbers, while your business teams spend more time analyzing and acting on insights.

Reduced Duplicate Work

Discoverable data prevents data teams from rebuilding the same datasets or metrics in parallel, reducing technical debt.

Increased Trust in Data

Clear data lineage, ownership, and quality indicators make data more trustworthy, which increases adoption across the organization.

Better Collaboration Between Teams

Aligning your data, finance, sales, marketing, and leadership teams on the same data definitions and traceability reduces conflicts and the risk of misinterpretation.

Improved Data Governance at Scale

Discoverability supports data governance by making sensitive data visible, classified, and auditable without slowing down access.

How ClicData Supports Data Observability

Data observability is not just about pipelines. For many teams, data issues are first discovered in dashboards, reports, or ad hoc analysis. This is where ClicData fits.

ClicData helps teams observe data at the analytics and BI layer, where data is actually explored and consumed.

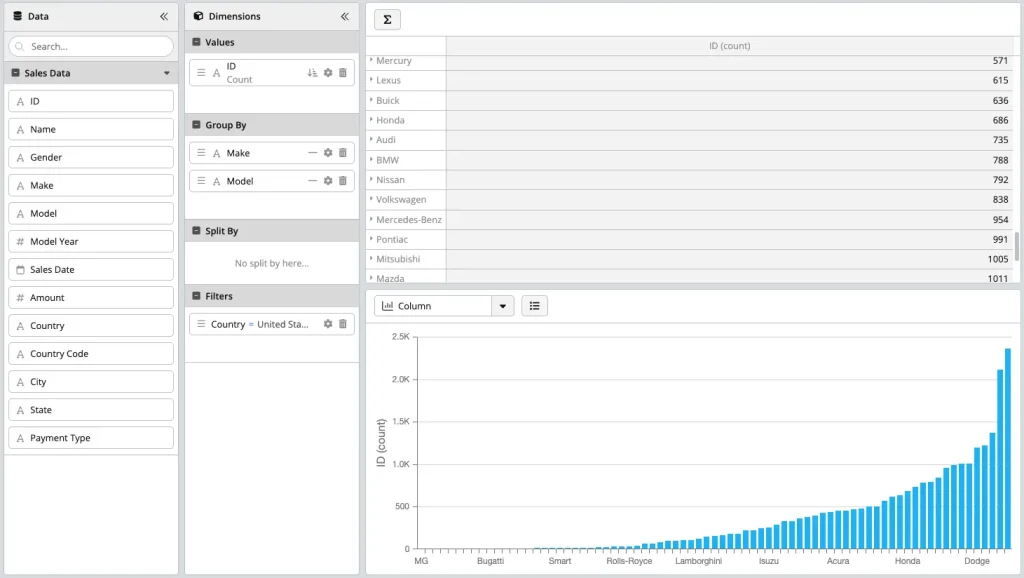

With Insights, you interact directly with datasets using fast filtering, grouping, pivoting, and comparisons. Instead of guessing whether a number is wrong, you can quickly explore how values behave, compare periods, and surface anomalies before they become reporting issues.

Schema Analysis focuses on understanding the data behind the data. By exposing value distributions, null and empty percentages, and outliers, it helps spot structural issues early, such as incomplete loads, unexpected sparsity, or silent schema changes that break analyses without obvious errors.

Instant Alerts turn data checks into signals. You can define simple or advanced conditions on data freshness, volumes, or business rules, and get notified through email, Slack, SMS, or web services when something changes. You know about any issues, incidents or pipeline execution failures as they happen.

Together, these capabilities make data behavior visible where decisions are made, helping you catch issues earlier, investigate faster, and trust your analytics more, without adding heavy observability infrastructure.

Data Observability FAQ for Data and BI Analysts

Why should I care about data observability as an analyst?

Because you are often the first to be blamed when numbers look wrong. Observability helps you catch issues before they reach dashboards, reducing reactive investigations and credibility loss.

Is data observability only useful for engineers?

No.

While engineers configure many checks, analysts benefit directly from freshness indicators, incident history, and confidence in data reliability.

How does observability reduce time spent debugging reports?

Instead of manually checking sources, you can quickly see whether:

- Data is late

- A schema changed

- An anomaly was detected upstream

This narrows investigation scope immediately.
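As a rough sketch of that triage step, the three questions above can be reduced to a status record per upstream dataset and a function that lists only what needs attention. The `DatasetStatus` class, the `triage` function, and the dataset names are hypothetical, chosen purely for illustration; a real setup would fill these flags from its monitoring results.

```python
from dataclasses import dataclass


@dataclass
class DatasetStatus:
    """Observability flags for one upstream dataset (hypothetical structure)."""
    name: str
    is_late: bool = False
    schema_changed: bool = False
    anomaly_detected: bool = False


def triage(statuses):
    """Return (dataset, reason) pairs for upstream datasets that need a look."""
    findings = []
    for status in statuses:
        if status.is_late:
            findings.append((status.name, "data is late"))
        if status.schema_changed:
            findings.append((status.name, "schema changed"))
        if status.anomaly_detected:
            findings.append((status.name, "anomaly detected"))
    return findings


# Hypothetical upstream datasets feeding a single report.
upstream = [
    DatasetStatus("orders"),
    DatasetStatus("customers", schema_changed=True),
    DatasetStatus("web_events", is_late=True, anomaly_detected=True),
]
findings = triage(upstream)
```

Here `orders` drops out of the investigation entirely, leaving two datasets and three concrete reasons to check.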

What is the risk of too many observability alerts?

Alert fatigue. You don’t need to be alerted for every single “issue”, only the ones that will have a real impact on your business.

For example, suppose you trigger an alert every time a data refresh is a few minutes late, even though the delay is expected during peak hours or weekends. After seeing the same alert over and over with no real impact, you’ll start ignoring it, because you’re human! Then, when a true data outage happens, the alert will most likely be dismissed because it looks like just another false alarm.
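One way to avoid this, sketched below, is to make the tolerated delay depend on when the refresh was scheduled. This is only an illustration: the tolerance values, the peak-hour window, and the function names are assumptions, and you would tune them to your own refresh patterns.

```python
from datetime import datetime, timedelta

# Hypothetical tolerances: how late a refresh may be before anyone is notified.
DEFAULT_TOLERANCE = timedelta(minutes=5)
RELAXED_TOLERANCE = timedelta(minutes=45)  # weekends and peak hours
PEAK_HOURS = range(8, 11)  # 08:00 to 10:59, an assumed busy window


def allowed_delay(expected_at):
    """Return the tolerated delay for a refresh scheduled at expected_at."""
    is_weekend = expected_at.weekday() >= 5  # 5 = Saturday, 6 = Sunday
    is_peak = expected_at.hour in PEAK_HOURS
    return RELAXED_TOLERANCE if is_weekend or is_peak else DEFAULT_TOLERANCE


def should_alert(expected_at, arrived_at):
    """Alert only when the delay exceeds what is normal for that time slot."""
    return arrived_at - expected_at > allowed_delay(expected_at)


# Monday 09:00 (peak hours), 10 minutes late: expected, so stay quiet.
quiet = should_alert(datetime(2024, 1, 8, 9, 0), datetime(2024, 1, 8, 9, 10))
# Tuesday 14:00 (normal hours), 10 minutes late: worth an alert.
noisy = should_alert(datetime(2024, 1, 9, 14, 0), datetime(2024, 1, 9, 14, 10))
```

A refresh that runs ten minutes late on a Monday morning stays silent, while the same delay on a quiet Tuesday afternoon raises an alert, so the alerts that do fire are more likely to be taken seriously.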

Does data observability guarantee correct metrics?

Sorry, no.

Observability ensures data behaves as expected, not that the business logic is correct. Metric definitions, assumptions, and modeling choices still require human judgment and governance.