How do you get a single trusted source of truth from scattered data sources?

For anyone working directly with data, it is likely the data you work with is not made up of a single clean data source but instead consists of multiple sources which are all isolated from one another. There may be data from a web analytics platform, CRM systems, Financial data in spreadsheets as well as in traditional data warehouses.

Each source on its own may be useful but when combined together can generate business value through insights that are otherwise not available. This article lays out a foundation for unifying multiple data points into a single source of truth, then describes some resources available within ClicData for some of the more frequently used sources.

Part 1 : Foundations for Best Practices

1) Confirm sources are required

Data sources should only be ingested if they enable some sort of business value i.e they are important to a decision or metric. If a data source doesn’t satisfy this then there is no point using it. To expand on whether sources are valuable:

- What will the data lead to? Will it help with decision making, answer important questions or provide input to a key metric?

- Eg, Which channels drive profitable new customers by region?

- Be upfront with documentation by maintaining a ‘source contract’ where each field is traced to a use case as well as refresh cadence.

- Prefer systemic sources over manual ones

- Eg, a CRM database table over an emailed spreadsheet

Common pitfalls

- Ingesting data because it’s ‘nice to have’ or it might be helpful later.

- Pulling duplicate versions of the same data (CSV export + API for same table).

- Compliance and regulation

- Eg, Keeping PII that isn’t used creates risk or unnecessary governance overhead.

Definition of done

- Every included source is mapped to a use case that it supports.

- You can remove any non-mapped sources/columns without breaking downstream logic.

2) Combining data sources

Once data sources are deemed valuable, it’s likely that some, if not most, of them will need to be joined or merged in some way. How this is done should be modelled out. Here are some considerations:

- Determine the grain of data upfront. At what level will the data once combined be? Will it be customer level data? Line item from sales? It may be a combination of different grains but this should be decided.

- Consider the keys used for joining data. Use stable natural keys, such as ID level data. If this is not available then define dummy or composite keys

- Eg, email address and signup date.

Common pitfalls

- Joining at mismatched grains (“event” to “daily”) causes incorrect results.

- Changing mapping dictionaries without version control.

Definition of done

- For each integrated data source there are defined keys with a chosen grain documented.

3) Refresh cadence

Sources can be added, combined correctly but very rarely will the results of the data be used as a one off. The more likely scenario is that data will be needed ongoingly especially if it’s relevant for a use case. How often it needs to be updated must match the business tolerance for staleness as well as align with technical capabilities of the source. Some ideas to help guide this are:

- Classify cadence for each source such as Batch or Real time.

- If batch then hourly, weekly, monthly or other?

- Incremental loads with overlap. Extract data that has changed since a specific time,

- Eg, a look back period of 24hrs.

- Orchestrate from the slowest dependency.

- Eg, If the slowest data source updates at 6am then schedule the refresh at 6:15am with retries.

- Establish an SLA

- E.g., Complete by 7am daily & show the last successful run timestamp.

Common pitfalls

- Full reloads are slow and costly and should therefore be avoided or used as a last resort.

- Not building in any contingencies with retries. Eg, a temporary network issue could result in stale data.

Definition of done

- Each source has a declared cadence, window, and ingestion logic.

- The unified job is orchestrated with dependencies and documented SLA’s.

4) Ensure quality such as schema checks

Quality must be built into the process. Validations and checks that are implemented upfront minimizes the risk of low quality data coming through.

- Schema checks: Verify required columns exist with expected data types & build in failsafes/notifications when there is any drift from this.

- Volume & freshness: Row counts are within the expected ranges; the prior day’s data is present.

Common pitfalls

- Skipping quality checks and only relying only on eyeballing dashboards to detect issues.

- Allowing schema drift to auto-add columns.

- Alerting without any context (no dataset name, no run ID, no remediation link).

Definition of done

- Automated checks run with every refresh; failures notify owners with actionable context.

Part 2: Connecting to ClicData

ClicData can natively connect to 500+ connectors as data sources. From spreadsheets saved locally to cloud storage, we will now cover some of the common connectors available and how they can be utilised.

Data Flow

The Data Flow module acts as a visual ETL layer inside ClicData. It lets you bring together multiple datasets—from CRMs, spreadsheets, databases, or cloud apps—and define how they should merge, transform, and enrich each other.

Each step in the flow can include joins, filters, calculated fields, and lookups, all represented visually so you can trace dependencies and debug issues quickly. Once created, a flow can be reused across multiple dashboards or refreshed automatically with your data schedule.

Setup tips

- Start by adding individual sources first, then link them in a flow to handle joins and transformations in one place.

- Use naming conventions for nodes (e.g.,

merge_sales_crmorclean_product_dim) to keep complex flows readable. - Validate intermediate outputs at each step—especially before merges—to ensure field names and data types align.

Files & Storage

This can be used for one-off uploads, Finance spreadsheets, csv exports or ad-hoc files (SharePoint/OneDrive/Google Drive/Azure/AWS).

Setup tips

- Map a named path (e.g., /reports/sales/*.csv) rather than a single filename to allow dynamic files.

- Enable schema validation (column name & type) so accidental column insertions don’t cause any errors.

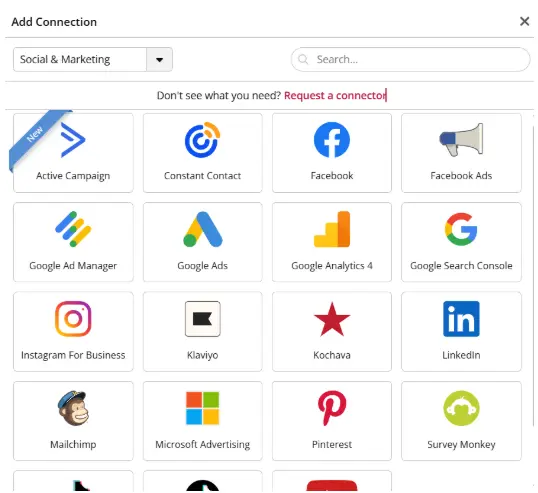

Social & Marketing

Used for measuring campaign performance from platforms such as Google Ads, LinkedIn Ads, Meta, TikTok, Mailchimp & HubSpot Marketing.

Setup tips

- Pull core entities separately (Campaigns, AdGroups/AdSets, Ads/Creatives, Daily Stats) and join on IDs to control grain.

- Backfill for data across an overlapping retention period to capture conversions.

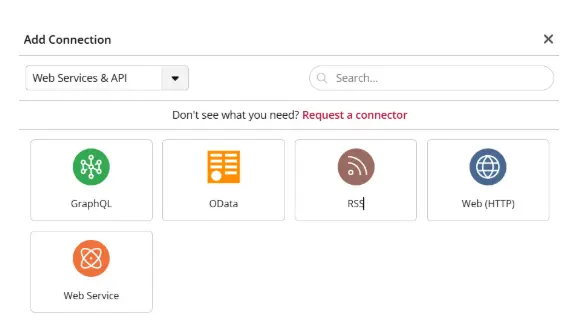

Web Services & API

These connectors can be used to access SaaS that do not have a native connector or microservice.

Setup tips

- Store credentials as secrets instead of hard-coded credentials.

- Log response status for observability.

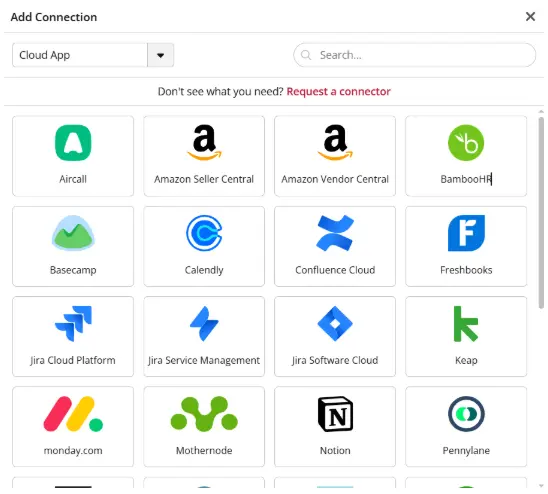

Cloud Applications

Useful for tools such as CRM (Salesforce, Dynamics), Service Desk (Zendesk, ServiceNow), Collaboration (Jira, Confluence), Finance (NetSuite, Xero).

Setup tips

- Read reference tables (users, accounts, products) first; then fact tables (tickets, opportunities, invoices) before joining with stable IDs.

- Handle soft-deletion of data if the app flags records as deleted/archived

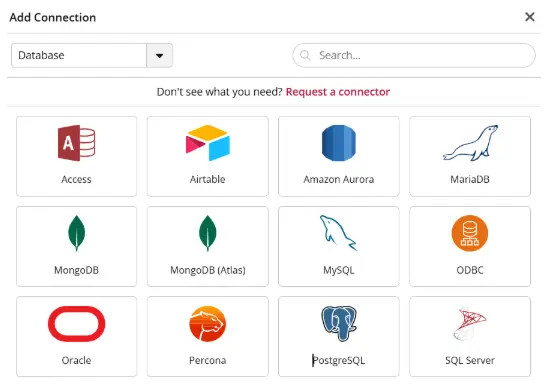

Databases

Whether it’s on-prem or cloud hosted transactional DBs (MySQL, SQL Server, PostgreSQL, Oracle) the schema is set from this conventional source of data.

Setup tips

- Leverage chronological fields (timestamp field) to drive incremental pulls.

- Partition large tables by date or id ranges to parallelize.

- Use a connection broker which allows a single table/query for multiple databases simultaneously.

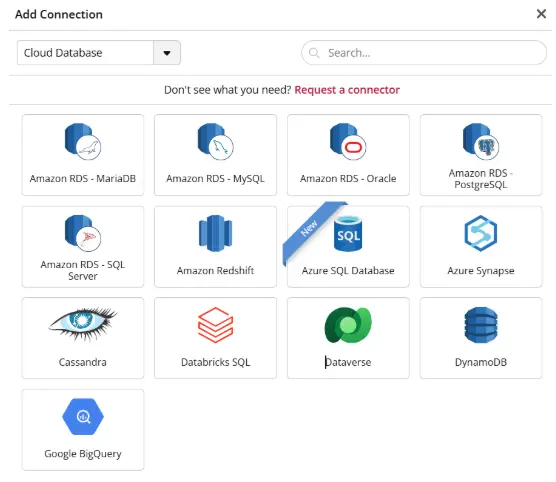

Cloud Database

The modern data stack now means cloud based warehouses are commonly used in practice featuring BigQuery, Snowflake, Redshift or Azure Synapse.

Setup tips

- Use materialized views or table snapshots for commonly accessible data.

- Consider creating semantic layers for better practices.

Final thoughts

Bringing disparate data together isn’t about connecting everything, it’s about connecting the right data, at the right detail and cadence with guardrails that ensure quality. Part 1 provided the foundations which were to confirm a source’s value, define join keys and grain, set freshness SLAs and automating quality. Part 2 covered some of ClicData’s most commonly used connectors to assist turning those foundations into reality.